If the company you work for is like mine, your specifications are a Word document produced by someone who barely knows the technology, or by someone who knows the technology but not the business. Over time the specifications also fall out of date, and so do any samples they contain.

So, how do you know if a specification is out of date? Usually you have to check it manually, and that rarely happens.

Another problem I have come across with specifications written as Word documents is that the examples given are inaccurate. This makes it difficult for developers to know what the requirement is, so they “guess” and interpret the specification in their own way.

Written specifications are up for interpretation.

For example, I tried Gojko’s Star experiment with my team. As expected, everyone in the team answered 10. When I asked “Why not 5?” an argument ensued, but a few got the point: “Specifications can be subjective!”

So how do you get away from the subjectivity? There are a lot of different practices at the moment: Test Driven Development (TDD), Behaviour Driven Development (BDD), and Acceptance Test Driven Development (ATDD). There are probably a few more that I have left out, but all these methods have something in common: they document your specification using tests. Tests are verifiable and measurable. If a test fails, the specification is not met. (The test itself may be wrong in the first place, but that is for a later discussion.)

The documentation of these tests can also take different forms. With TDD, for example, tests are written with traditional unit-testing tools such as JUnit for Java. These tests/specifications are tightly coupled to the code they are testing/designing. This is fine when the audience is other developers, but when the audience is business analysts, project managers and other management, they may get a little bamboozled.

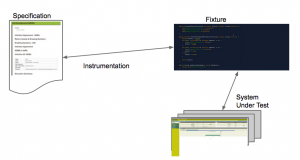
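To give a feel for what a code-level specification looks like, here is a minimal sketch. It assumes a made-up business rule (orders of 100.00 or more get 10% off) and a hypothetical `Discount` class; neither comes from a real project. Plain Java checks stand in for JUnit assertions so that the example runs without any dependencies.

```java
// A code-level "specification" for a hypothetical discount rule.
// Amounts are held in cents to avoid floating-point rounding.
class DiscountSpecTest {
    public static void main(String[] args) {
        // Each check plays the role of a JUnit assertEquals call.
        check(9000, Discount.priceAfterDiscount(10000), "order of 100.00 gets 10% off");
        check(9999, Discount.priceAfterDiscount(9999), "order under 100.00 pays full price");
        System.out.println("All specification checks passed");
    }

    // Throws if the system does not behave as the specification says.
    static void check(long expected, long actual, String description) {
        if (expected != actual) {
            throw new AssertionError(description + ": expected " + expected + " but was " + actual);
        }
    }
}

// The (hypothetical) system under test.
class Discount {
    static final long THRESHOLD_CENTS = 10000;

    static long priceAfterDiscount(long orderTotalCents) {
        if (orderTotalCents >= THRESHOLD_CENTS) {
            return orderTotalCents - orderTotalCents / 10; // take 10% off
        }
        return orderTotalCents;
    }
}
```

A developer can read this easily enough, but the rule itself is buried in method names and magic numbers, which is exactly what makes it hard going for a business reader.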

This is where ATDD and BDD come in. These methodologies use natural language, be it English or any other language, to describe the specification. Bridging code, known as a fixture, then takes the examples written within the specification and executes them as tests.
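The idea of a fixture can be sketched in a few lines. The example below is only an illustration, not how any particular tool works: the specification format, the `DiscountFixture` and `Discount` classes, and the discount rule itself are all assumptions made up for this sketch. The fixture skips the prose, picks out the example rows, runs each one against the system, and reports whether the system and the specification agree.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;

class SpecRunner {
    public static void main(String[] args) {
        // The specification: a sentence of prose plus a table of examples.
        String[] spec = {
            "Orders of 100.00 or more receive a 10% discount.",
            "| order total | amount charged |",
            "| 100.00      | 90.00          |",
            "| 99.99       | 99.99          |",
            "| 250.00      | 225.00         |"
        };
        for (String result : DiscountFixture.run(spec)) {
            System.out.println(result);
        }
    }
}

// Hypothetical fixture: bridges the written examples and the system.
class DiscountFixture {
    // Returns one PASS/FAIL line per example row in the specification.
    static List<String> run(String[] specLines) {
        List<String> results = new ArrayList<>();
        for (String line : specLines) {
            String trimmed = line.trim();
            if (!trimmed.startsWith("|")) {
                continue; // prose, not an example row
            }
            String[] cells = trimmed.substring(1).split("\\|");
            if (cells.length < 2) {
                continue;
            }
            try {
                long total = toCents(cells[0]);
                long expected = toCents(cells[1]);
                long actual = Discount.priceAfterDiscount(total);
                String verdict = (actual == expected) ? "PASS" : "FAIL";
                results.add(verdict + ": order of " + toText(total)
                        + " charged " + toText(actual)
                        + ", specification says " + toText(expected));
            } catch (NumberFormatException e) {
                // Header row: the cells are column names, not amounts.
            }
        }
        return results;
    }

    static long toCents(String cell) {
        return new BigDecimal(cell.trim()).movePointRight(2).longValueExact();
    }

    static String toText(long cents) {
        return BigDecimal.valueOf(cents, 2).toPlainString();
    }
}

// The (hypothetical) system under test.
class Discount {
    static long priceAfterDiscount(long orderTotalCents) {
        if (orderTotalCents >= 10000) {
            return orderTotalCents - orderTotalCents / 10; // take 10% off
        }
        return orderTotalCents;
    }
}
```

The business reader only ever sees the sentence and the table. If someone edits a row, or a developer changes the rule, the corresponding line flips to FAIL.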

This methodology provides two-way verification. You can check whether your system meets the specification, and you can also see whether the specification accurately describes the system.

Any change that creates a failing test immediately tells you that either the system or the specification has changed.

So, how are these types of specifications written?

There are a number of methods, but the major types are…

- Gherkin-based. These are used by products such as JBehave, Cucumber, EasyB etc. They describe user stories in text files using the “Given, When, Then” terminology, and then have a bridge to convert the scenarios to tests.

- Table-based. These are used by FitNesse and Concordion. FitNesse uses a wiki to write the specification, with a choice of two test systems: the older FIT and the newer SLIM. Concordion uses HTML and the `<span>` tag to mark entries to be used by the fixtures.
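To make the “Given, When, Then” style a little more concrete, here is a rough sketch of how a bridge might turn a scenario into a test. It is not the API of JBehave, Cucumber or any other product; it simply matches each step against a regular expression. The scenario wording, the `DiscountSteps` and `Discount` classes, and the discount rule are all invented for illustration.

```java
import java.math.BigDecimal;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ScenarioDemo {
    public static void main(String[] args) {
        // A scenario as it might appear in a plain text file.
        String[] scenario = {
            "Given an order totalling 100.00",
            "When the discount is applied",
            "Then the amount charged is 90.00"
        };
        boolean passed = new DiscountSteps().run(scenario);
        System.out.println(passed ? "Scenario passed" : "Scenario failed");
    }
}

// Hypothetical step definitions: each pattern binds one sentence to code.
class DiscountSteps {
    private static final Pattern GIVEN =
            Pattern.compile("Given an order totalling (\\d+\\.\\d{2})");
    private static final Pattern WHEN =
            Pattern.compile("When the discount is applied");
    private static final Pattern THEN =
            Pattern.compile("Then the amount charged is (\\d+\\.\\d{2})");

    private long orderTotalCents;
    private long chargedCents;

    // Runs the steps in order; returns false if any "Then" step is not met.
    boolean run(String[] steps) {
        boolean passed = true;
        for (String step : steps) {
            Matcher m;
            if ((m = GIVEN.matcher(step)).matches()) {
                orderTotalCents = toCents(m.group(1));          // set up state
            } else if (WHEN.matcher(step).matches()) {
                chargedCents = Discount.priceAfterDiscount(orderTotalCents); // act
            } else if ((m = THEN.matcher(step)).matches()) {
                if (chargedCents != toCents(m.group(1))) {      // verify
                    passed = false;
                }
            } else {
                throw new IllegalArgumentException("No step definition matches: " + step);
            }
        }
        return passed;
    }

    static long toCents(String amount) {
        return new BigDecimal(amount).movePointRight(2).longValueExact();
    }
}

// The (hypothetical) system under test.
class Discount {
    static long priceAfterDiscount(long orderTotalCents) {
        if (orderTotalCents >= 10000) {
            return orderTotalCents - orderTotalCents / 10; // take 10% off
        }
        return orderTotalCents;
    }
}
```

The table-based tools follow the same principle, except that the examples live in table cells or marked-up HTML rather than in sentences.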

I haven’t yet had a chance to play with the Gherkin-based products, but I have done a couple of proofs of concept (POCs) with FitNesse and Concordion.

Each had its own advantages and disadvantages, but my overall impression was that both are a better way to get good specifications than a simple Word document.